Detectron2: Find the Bear

Detectron2 is a framework developed and maintained by Facebook. It is a tool for object detection and image segmentation that includes implementations of many models, e.g. RetinaNet, Mask R-CNN, Faster R-CNN and others. It also comes with a wide range of models trained on freely available datasets, published as the Detectron2 Model Zoo.

So I decided to try out some of its basic features.

Installing the framework

I do most of my experiments on Kaggle. The framework is not part of the prepared runtime environment there, so I have to install it myself. Fortunately, this takes only a few fairly simple steps.

First I download the whole project from GitHub:

    !git clone 'https://github.com/facebookresearch/detectron2'

    Cloning into 'detectron2'...
    remote: Enumerating objects: 15792, done.
    remote: Counting objects: 100% (49/49), done.
    remote: Compressing objects: 100% (39/39), done.
    remote: Total 15792 (delta 15), reused 27 (delta 10), pack-reused 15743 (from 1)
    Receiving objects: 100% (15792/15792), 6.36 MiB | 26.27 MiB/s, done.
    Resolving deltas: 100% (11509/11509), done.

Next I need to install the dependencies specified in the distribution and add the project to my runtime environment:

    import sys, os, distutils.core
    dist = distutils.core.run_setup("./detectron2/setup.py")
    !python -m pip install {' '.join([f"'{x}'" for x in dist.install_requires])}
    sys.path.insert(0, os.path.abspath('./detectron2'))

    Ignoring dataclasses: markers 'python_version < "3.7"' don't match your environment
    Requirement already satisfied: Pillow>=7.1 in /opt/conda/lib/python3.10/site-packages (10.3.0)
    Requirement already satisfied: matplotlib in /opt/conda/lib/python3.10/site-packages (3.7.5)
    Collecting pycocotools>=2.0.2
    Downloading pycocotools-2.0.8-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (1.1 kB)
    Requirement already satisfied: termcolor>=1.1 in /opt/conda/lib/python3.10/site-packages (2.4.0)
    Collecting yacs>=0.1.8
    Downloading yacs-0.1.8-py3-none-any.whl.metadata (639 bytes)
    Requirement already satisfied: tabulate in /opt/conda/lib/python3.10/site-packages (0.9.0)
    Requirement already satisfied: cloudpickle in /opt/conda/lib/python3.10/site-packages (3.0.0)
    Requirement already satisfied: tqdm>4.29.0 in /opt/conda/lib/python3.10/site-packages (4.66.4)
    Requirement already satisfied: tensorboard in /opt/conda/lib/python3.10/site-packages (2.16.2)
    Collecting fvcore<0.1.6,>=0.1.5
    Downloading fvcore-0.1.5.post20221221.tar.gz (50 kB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 50.2/50.2 kB 2.0 MB/s eta 0:00:00
    Preparing metadata (setup.py) ... done
    Collecting iopath<0.1.10,>=0.1.7
    Downloading iopath-0.1.9-py3-none-any.whl.metadata (370 bytes)
    Collecting omegaconf<2.4,>=2.1
    Downloading omegaconf-2.3.0-py3-none-any.whl.metadata (3.9 kB)
    Collecting hydra-core>=1.1
    Downloading hydra_core-1.3.2-py3-none-any.whl.metadata (5.5 kB)
    Collecting black
    Downloading black-24.10.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.manylinux_2_28_x86_64.whl.metadata (79 kB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 79.2/79.2 kB 4.7 MB/s eta 0:00:00
    Requirement already satisfied: packaging in /opt/conda/lib/python3.10/site-packages (21.3)
    Requirement already satisfied: contourpy>=1.0.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib) (1.2.1)
    Requirement already satisfied: cycler>=0.10 in /opt/conda/lib/python3.10/site-packages (from matplotlib) (0.12.1)
    Requirement already satisfied: fonttools>=4.22.0 in /opt/conda/lib/python3.10/site-packages (from matplotlib) (4.53.0)
    Requirement already satisfied: kiwisolver>=1.0.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib) (1.4.5)
    Requirement already satisfied: numpy<2,>=1.20 in /opt/conda/lib/python3.10/site-packages (from matplotlib) (1.26.4)
    Requirement already satisfied: pyparsing>=2.3.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib) (3.1.2)
    Requirement already satisfied: python-dateutil>=2.7 in /opt/conda/lib/python3.10/site-packages (from matplotlib) (2.9.0.post0)
    Requirement already satisfied: PyYAML in /opt/conda/lib/python3.10/site-packages (from yacs>=0.1.8) (6.0.2)
    Requirement already satisfied: absl-py>=0.4 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (1.4.0)
    Requirement already satisfied: grpcio>=1.48.2 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (1.62.2)
    Requirement already satisfied: markdown>=2.6.8 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (3.6)
    Requirement already satisfied: protobuf!=4.24.0,>=3.19.6 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (3.20.3)
    Requirement already satisfied: setuptools>=41.0.0 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (70.0.0)
    Requirement already satisfied: six>1.9 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (1.16.0)
    Requirement already satisfied: tensorboard-data-server<0.8.0,>=0.7.0 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (0.7.2)
    Requirement already satisfied: werkzeug>=1.0.1 in /opt/conda/lib/python3.10/site-packages (from tensorboard) (3.0.4)
    Collecting portalocker (from iopath<0.1.10,>=0.1.7)
    Downloading portalocker-2.10.1-py3-none-any.whl.metadata (8.5 kB)
    Collecting antlr4-python3-runtime==4.9.* (from omegaconf<2.4,>=2.1)
    Downloading antlr4-python3-runtime-4.9.3.tar.gz (117 kB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 117.0/117.0 kB 8.3 MB/s eta 0:00:00
    Preparing metadata (setup.py) ... done
    Requirement already satisfied: click>=8.0.0 in /opt/conda/lib/python3.10/site-packages (from black) (8.1.7)
    Requirement already satisfied: mypy-extensions>=0.4.3 in /opt/conda/lib/python3.10/site-packages (from black) (1.0.0)
    Collecting packaging
    Downloading packaging-24.1-py3-none-any.whl.metadata (3.2 kB)
    Collecting pathspec>=0.9.0 (from black)
    Downloading pathspec-0.12.1-py3-none-any.whl.metadata (21 kB)
    Requirement already satisfied: platformdirs>=2 in /opt/conda/lib/python3.10/site-packages (from black) (3.11.0)
    Requirement already satisfied: tomli>=1.1.0 in /opt/conda/lib/python3.10/site-packages (from black) (2.0.1)
    Requirement already satisfied: typing-extensions>=4.0.1 in /opt/conda/lib/python3.10/site-packages (from black) (4.12.2)
    Requirement already satisfied: MarkupSafe>=2.1.1 in /opt/conda/lib/python3.10/site-packages (from werkzeug>=1.0.1->tensorboard) (2.1.5)
    Downloading pycocotools-2.0.8-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (427 kB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 427.8/427.8 kB 20.8 MB/s eta 0:00:00
    Downloading yacs-0.1.8-py3-none-any.whl (14 kB)
    Downloading iopath-0.1.9-py3-none-any.whl (27 kB)
    Downloading omegaconf-2.3.0-py3-none-any.whl (79 kB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 79.5/79.5 kB 5.9 MB/s eta 0:00:00
    Downloading hydra_core-1.3.2-py3-none-any.whl (154 kB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 154.5/154.5 kB 11.1 MB/s eta 0:00:00
    Downloading black-24.10.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.manylinux_2_28_x86_64.whl (1.8 MB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.8/1.8 MB 61.8 MB/s eta 0:00:00
    Downloading packaging-24.1-py3-none-any.whl (53 kB)
    ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 54.0/54.0 kB 3.6 MB/s eta 0:00:00
    Downloading pathspec-0.12.1-py3-none-any.whl (31 kB)
    Downloading portalocker-2.10.1-py3-none-any.whl (18 kB)
    Building wheels for collected packages: fvcore, antlr4-python3-runtime
    Building wheel for fvcore (setup.py) ... done
    Created wheel for fvcore: filename=fvcore-0.1.5.post20221221-py3-none-any.whl size=61400 sha256=1174d73e7b579fa1c5ba10f365a2b2971ad1b26d7047828415312600d5ca4d30
    Stored in directory: /root/.cache/pip/wheels/01/c0/af/77c1cf53a1be9e42a52b48e5af2169d40ec2e89f7362489dd0
    Building wheel for antlr4-python3-runtime (setup.py) ... done
    Created wheel for antlr4-python3-runtime: filename=antlr4_python3_runtime-4.9.3-py3-none-any.whl size=144554 sha256=9281d644c7734edb7bae58e7fa293b79c2f7314f1a7d2d41350c45f9cd92845d
    Stored in directory: /root/.cache/pip/wheels/12/93/dd/1f6a127edc45659556564c5730f6d4e300888f4bca2d4c5a88
    Successfully built fvcore antlr4-python3-runtime
    Installing collected packages: antlr4-python3-runtime, yacs, portalocker, pathspec, packaging, omegaconf, iopath, hydra-core, black, pycocotools, fvcore
    Attempting uninstall: packaging
    Found existing installation: packaging 21.3
    Uninstalling packaging-21.3:
    Successfully uninstalled packaging-21.3
    ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.
    cudf 24.8.3 requires cubinlinker, which is not installed.
    cudf 24.8.3 requires cupy-cuda11x>=12.0.0, which is not installed.
    cudf 24.8.3 requires ptxcompiler, which is not installed.
    cuml 24.8.0 requires cupy-cuda11x>=12.0.0, which is not installed.
    dask-cudf 24.8.3 requires cupy-cuda11x>=12.0.0, which is not installed.
    cudf 24.8.3 requires cuda-python<12.0a0,>=11.7.1, but you have cuda-python 12.6.0 which is incompatible.
    distributed 2024.7.1 requires dask==2024.7.1, but you have dask 2024.9.1 which is incompatible.
    google-cloud-bigquery 2.34.4 requires packaging<22.0dev,>=14.3, but you have packaging 24.1 which is incompatible.
    jupyterlab 4.2.5 requires jupyter-lsp>=2.0.0, but you have jupyter-lsp 1.5.1 which is incompatible.
    jupyterlab-lsp 5.1.0 requires jupyter-lsp>=2.0.0, but you have jupyter-lsp 1.5.1 which is incompatible.
    libpysal 4.9.2 requires shapely>=2.0.1, but you have shapely 1.8.5.post1 which is incompatible.
    rapids-dask-dependency 24.8.0a0 requires dask==2024.7.1, but you have dask 2024.9.1 which is incompatible.
    ydata-profiling 4.10.0 requires scipy<1.14,>=1.4.1, but you have scipy 1.14.1 which is incompatible.
    Successfully installed antlr4-python3-runtime-4.9.3 black-24.10.0 fvcore-0.1.5.post20221221 hydra-core-1.3.2 iopath-0.1.9 omegaconf-2.3.0 packaging-24.1 pathspec-0.12.1 portalocker-2.10.1 pycocotools-2.0.8 yacs-0.1.8

There are some dependency problems: pip reports conflicts among packages that were already present in the environment. I have not dealt with them for now, since they caused me no trouble.

Now I can check what I actually have installed:

    import torch, detectron2
    !nvcc --version
    TORCH_VERSION = ".".join(torch.__version__.split(".")[:2])
    CUDA_VERSION = torch.__version__.split("+")[-1]
    print("torch: ", TORCH_VERSION, "; cuda: ", CUDA_VERSION)
    print("detectron2:", detectron2.__version__)

    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2023 NVIDIA Corporation
    Built on Wed_Nov_22_10:17:15_PST_2023
    Cuda compilation tools, release 12.3, V12.3.107
    Build cuda_12.3.r12.3/compiler.33567101_0
    torch: 2.4 ; cuda: 2.4.0
    detectron2: 0.6

To run the framework I need GPU support and an installed CUDA driver. Without GPU support, Torch did not work for me.
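A side note on the printed "cuda: 2.4.0": the snippet takes whatever follows a "+" in the torch version string, and this build's string has no such suffix, so the torch version itself is printed again. The sketch below is one possible way to separate the two cases. The helper name `split_torch_version` and the sample string "2.4.0+cu121" are my own illustrations, not part of the original notebook. The optional part at the end uses `torch.version.cuda` and `torch.cuda.is_available()` and is skipped when torch is not installed.

```python
def split_torch_version(version: str):
    """Split a torch version string into (major.minor, local build tag or None).

    Hypothetical helper for illustration; the local tag is the part after '+',
    e.g. 'cu121', and is None when the string has no '+' suffix.
    """
    base, plus, local = version.partition("+")
    major_minor = ".".join(base.split(".")[:2])
    return major_minor, (local if plus else None)


# The version seen in the article has no '+' suffix, so there is no CUDA tag.
print(split_torch_version("2.4.0"))
# An illustrative version string that does carry a build tag.
print(split_torch_version("2.4.0+cu121"))

# Optional: ask torch directly, if it is installed in this environment.
try:
    import torch
    print("CUDA version torch was built with:", torch.version.cuda)
    print("GPU available:", torch.cuda.is_available())
except ImportError:
    print("torch is not installed in this environment")
```

Asking torch directly avoids parsing the version string at all, which seems the more robust option when the string format differs between builds.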

Object detection and segmentation

One of the basic tasks the framework's authors set for themselves is detecting the objects present in an image. The goal is to find a known object, which can be a person, a car, an animal, a tree and so on, and mark it with a bounding box. To achieve this, a model has to be trained on a dataset that contains the object classes and their localization as bounding boxes. One such dataset is COCO (Common Objects in Context), on which the Detectron2 models are trained. That makes my experiments much easier, because I can use such a ready-made model.

Segmentation is the second task the authors took on. Once I have found an object in the image, I would like to mark all the pixels that make it up (not just draw a rectangle around it). In this context one usually speaks of instance segmentation (I want to mark each object separately, e.g. distinguish each person in the image) or of semantic segmentation, where I mark the pixels of a chosen class (e.g. trees as a whole rather than trunk by trunk).

First, the environment setup for the test:
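To make the difference between the two kinds of segmentation concrete, here is a toy sketch in plain Python. It does not use Detectron2. The one-row "images", the class names and the helper `to_semantic` are made up for illustration. Each instance has its own mask, and a semantic mask is obtained here by merging all instance masks of the same class.

```python
# Hypothetical instance masks over a tiny 1x6 "image" (1 = pixel belongs to the object).
instance_masks = [
    {"class": "person", "mask": [1, 1, 0, 0, 0, 0]},
    {"class": "person", "mask": [0, 0, 0, 1, 1, 0]},
    {"class": "tree",   "mask": [0, 0, 1, 0, 0, 1]},
]


def to_semantic(instances):
    """Merge per-instance masks into one mask per class (pixel-wise OR)."""
    semantic = {}
    for inst in instances:
        merged = semantic.get(inst["class"], [0] * len(inst["mask"]))
        semantic[inst["class"]] = [a | b for a, b in zip(merged, inst["mask"])]
    return semantic


semantic_masks = to_semantic(instance_masks)

# Instance segmentation keeps the two persons apart ...
print(len([i for i in instance_masks if i["class"] == "person"]), "person instances")
# ... while the semantic view only knows which pixels are "person" at all.
print(semantic_masks)
```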

    import detectron2
    from detectron2.utils.logger import setup_logger
    setup_logger()

    import cv2, random
    import matplotlib.pyplot as plt

    from detectron2 import model_zoo
    from detectron2.engine import DefaultPredictor
    from detectron2.config import get_cfg
    from detectron2.utils.visualizer import Visualizer
    from detectron2.data import MetadataCatalog, DatasetCatalog

I also need an image, so I downloaded one:

    !wget http://farm8.staticflickr.com/7422/9543633506_36574fe48f_z.jpg -q -O input.jpg
    im = cv2.imread("./input.jpg")
    fig=plt.figure(figsize=(14, 6))
    plt.imshow(im[:,:,::-1])
    plt.show()

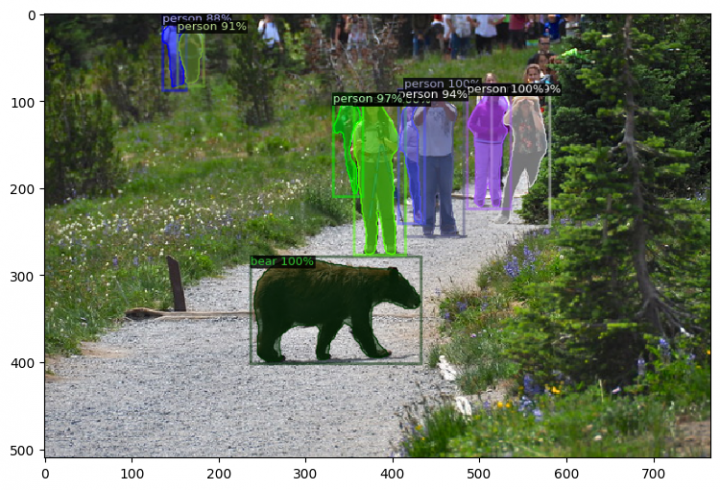

As the starting model for analysing the image I chose "COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml". It should give me both object detection and segmentation of the individual instances.

First I have to create an object holding the configuration parameters. I then update it with the parameters specific to the chosen model. The last step in building the final configuration is setting the location of the model weights (the cfg.MODEL.WEIGHTS parameter); the weights themselves are downloaded when the predictor is created.

Once the configuration object is ready, I can create a predictor from it. Its job is to analyse the given image and produce an output object describing the objects it found.

    cfg = get_cfg()
    # start from the configuration of the chosen Model Zoo model
    cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))
    # keep only detections with a score of at least 0.5
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5
    # pretrained weights for the same model
    cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")
    predictor = DefaultPredictor(cfg)
    outputs = predictor(im)

    [10/24 18:27:22 d2.checkpoint.detection_checkpoint]: [DetectionCheckpointer] Loading from https://dl.fbaipublicfiles.com/detectron2/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl ...
    model_final_f10217.pkl: 178MB [00:00, 179MB/s]

The analysis is now done and I can look at its result.

I printed only two attributes (the object has more of them), namely pred_classes and pred_boxes. The first is the list of classes of the objects found in the image. The model found only two object classes, 0 (person) and 21 (bear). The second attribute contains the coordinates of the rectangles localizing each detected instance.

    print(outputs["instances"].pred_classes)
    print(outputs["instances"].pred_boxes)

    tensor([ 0, 0, 21, 0, 0, 0, 0, 0, 0], device='cuda:0')
    Boxes(tensor([[345.0279, 62.7153, 401.5158, 213.6461],
            [296.4480, 77.5313, 345.7865, 230.9989],
            [197.9029, 232.5715, 360.9186, 335.1725],
            [405.3865, 67.1518, 446.8349, 186.6909],
            [427.9221, 67.8704, 483.9040, 203.5692],
            [276.9014, 76.5971, 305.0749, 175.0133],
            [339.2955, 72.9099, 363.9201, 201.0358],
            [127.4966, 7.7133, 152.6817, 69.1629],
            [113.0532, 0.0000, 136.0884, 73.3390]], device='cuda:0'))
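As a small illustration of working with these values further, the sketch below counts the detections per class and computes the size of the bear's box. It runs on plain Python lists copied from the printed output, so it needs neither a GPU nor Detectron2. The two-entry `CLASS_NAMES` mapping covers only the classes mentioned in the text, and I assume each box is given as (x1, y1, x2, y2) in pixels.

```python
from collections import Counter

# Values copied from the printed output. In the notebook they could be obtained
# with outputs["instances"].pred_classes.cpu().tolist() and
# outputs["instances"].pred_boxes.tensor.cpu().tolist().
pred_classes = [0, 0, 21, 0, 0, 0, 0, 0, 0]
pred_boxes = [
    [345.0279, 62.7153, 401.5158, 213.6461],
    [296.4480, 77.5313, 345.7865, 230.9989],
    [197.9029, 232.5715, 360.9186, 335.1725],
    [405.3865, 67.1518, 446.8349, 186.6909],
    [427.9221, 67.8704, 483.9040, 203.5692],
    [276.9014, 76.5971, 305.0749, 175.0133],
    [339.2955, 72.9099, 363.9201, 201.0358],
    [127.4966, 7.7133, 152.6817, 69.1629],
    [113.0532, 0.0000, 136.0884, 73.3390],
]

# Only the two classes named in the article; not the full COCO class list.
CLASS_NAMES = {0: "person", 21: "bear"}

# How many instances of each class were detected?
counts = Counter(CLASS_NAMES[c] for c in pred_classes)

# Pick the bear's box (assumed layout: x1, y1, x2, y2) and compute its size.
bear_boxes = [box for cls, box in zip(pred_classes, pred_boxes) if cls == 21]
x1, y1, x2, y2 = bear_boxes[0]
bear_width, bear_height = x2 - x1, y2 - y1

print(dict(counts))
print(f"bear box: {bear_width:.1f} x {bear_height:.1f} px")
```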

I can process the results further as I see fit, or display them with the Visualizer tool that is part of the framework:

    v = Visualizer(im, MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)
    out = v.draw_instance_predictions(outputs["instances"].to("cpu"))
    fig=plt.figure(figsize=(14, 6))
    plt.imshow(out.get_image()[:,:,::-1])
    plt.show()

This view shows several pieces of information. For each detected object you can see its class, the probability assigned to that class, the bounding box and, because I used a model with segmentation, also the segmentation mask of each object instance.

If I were interested only in the bear and its location, I could simplify the view, for example like this:

    out = outputs['instances']
    # keep only the instances classified as bear (class 21)
    inst = out[out.pred_classes == 21]
    # drop the masks so that only the box and label are drawn
    inst.remove('pred_masks')
    v = Visualizer(im, MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)
    out = v.draw_instance_predictions(inst.to("cpu"))
    fig=plt.figure(figsize=(14, 6))
    plt.imshow(out.get_image()[:,:,::-1])
    plt.show()

And I am left with just the bear.

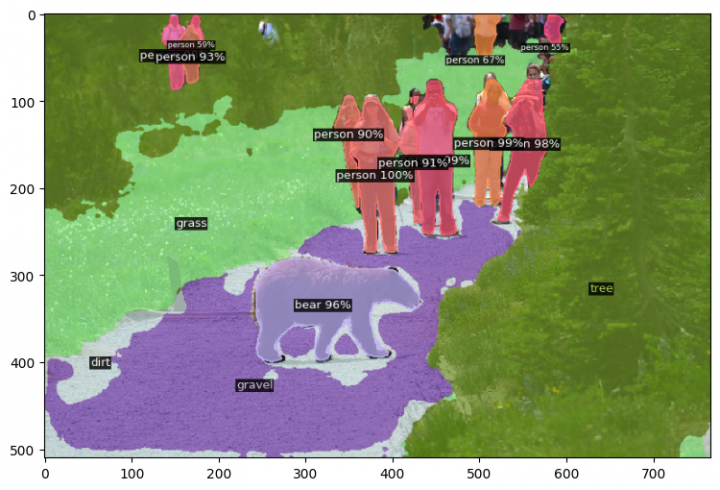

Panoptic segmentation

To show how the choice of model type affects the result of the analysis, I picked one more model. It should give me so-called panoptic segmentation, which combines segmentation of selected instances with semantic segmentation. An example will probably be clearer than a complicated explanation.

I started from the model "COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml":

    cfg = get_cfg()
    cfg.merge_from_file(model_zoo.get_config_file("COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml"))
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5  # set threshold for this model
    cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml")
    predictor = DefaultPredictor(cfg)
    # the panoptic output is a pair: a segment-id map and a list describing each segment
    panoptic_seg, segments_info = predictor(im)["panoptic_seg"]
    v = Visualizer(im, MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)
    out = v.draw_panoptic_seg_predictions(panoptic_seg.to("cpu"), segments_info)
    fig=plt.figure(figsize=(14, 6))
    # im was loaded by OpenCV as BGR, so flip the channels for matplotlib as in the earlier cells
    plt.imshow(out.get_image()[:,:,::-1])
    plt.show()

    [10/24 18:27:27 d2.checkpoint.detection_checkpoint]: [DetectionCheckpointer] Loading from https://dl.fbaipublicfiles.com/detectron2/COCO-PanopticSegmentation/panoptic_fpn_R_101_3x/139514519/model_final_cafdb1.pkl ...
    model_final_cafdb1.pkl: 261MB [00:06, 41.9MB/s]

The Model Zoo contains more model types than these. Interested readers are referred to the project's home page.
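The second value returned by the predictor, segments_info, is where the combination of both kinds of segmentation becomes visible: some segments are individual instances ("things") and others are whole regions of a class ("stuff"). The sketch below splits such a list into the two groups. All the entries are made up for illustration, and the key names follow my understanding of the Detectron2 output, so treat them as an assumption and check them against your own segments_info.

```python
# Hypothetical segments_info; ids, categories, scores and areas are invented.
segments_info = [
    {"id": 1, "isthing": True, "score": 0.99, "category_id": 21, "instance_id": 0},
    {"id": 2, "isthing": True, "score": 0.97, "category_id": 0, "instance_id": 1},
    {"id": 3, "isthing": False, "category_id": 37, "area": 52000},
]

# "Things" are countable objects segmented one instance at a time ...
things = [s for s in segments_info if s["isthing"]]
# ... while "stuff" segments cover all pixels of a class at once.
stuff = [s for s in segments_info if not s["isthing"]]

for seg in things:
    print(f"segment {seg['id']}: instance of category {seg['category_id']}")
for seg in stuff:
    print(f"segment {seg['id']}: region of category {seg['category_id']}")
```

Each `id` corresponds to a value in the panoptic_seg map, so the same split can be used to pick out the pixels of a single instance or of a whole region.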

Jiří Raška

works as an IT architect. In recent years he has focused on integration and communication projects in healthcare. His hobbies also include paragliding and mountain biking.
